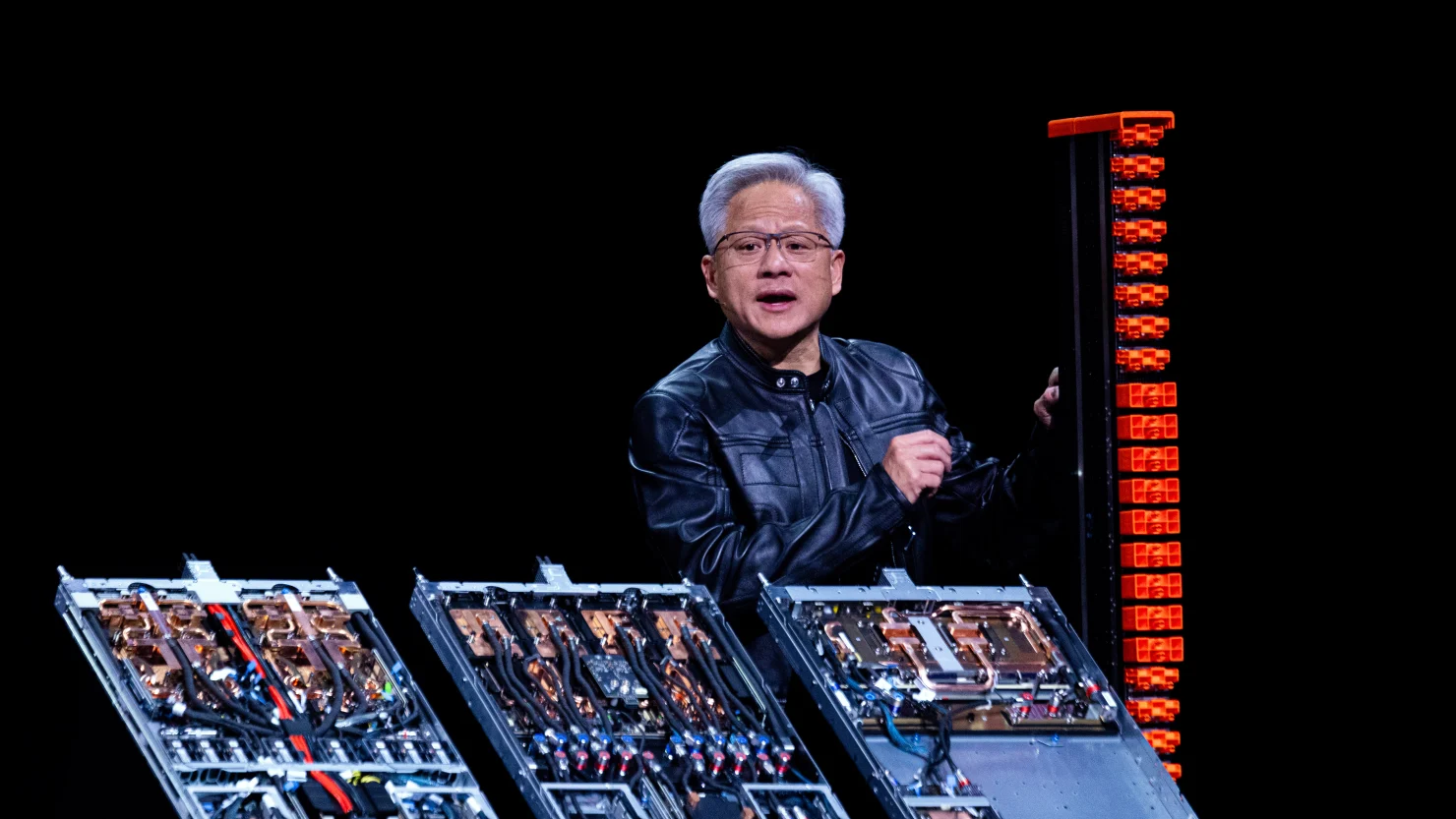

At Computex 2025 in Taiwan, Nvidia CEO Jensen Huang unveiled several developments aimed at reinforcing the company’s central role in artificial intelligence and computing. Among the most significant was “NVLink Fusion,” a new initiative that opens up Nvidia’s proprietary NVLink technology to non-Nvidia CPUs and custom accelerators. The move marks a shift from the company’s previous strategy, under which NVLink was used exclusively with Nvidia-designed chips.

NVLink is a high-speed interconnect developed by Nvidia to enable seamless communication between processors in AI and high-performance computing systems. With NVLink Fusion, customers and partners can now build semi-custom AI infrastructures that integrate Nvidia GPUs with a broader range of third-party processors, including CPUs and application-specific integrated circuits (ASICs). This development provides increased flexibility for data center design and fosters collaboration between Nvidia and other chipmakers.

Several industry players have already joined the NVLink Fusion ecosystem. Partners include MediaTek, Marvell, Alchip, Astera Labs, Synopsys, and Cadence. Nvidia also noted that customers such as Fujitsu and Qualcomm Technologies will now be able to link their own processors with Nvidia’s GPUs in AI data centers. The strategy allows Nvidia to penetrate AI systems not entirely based on its own chips, increasing its presence in a space traditionally shared with competitors.

Analysts see NVLink Fusion as a smart strategy to capture a share of data centers based on ASICs, offering Nvidia an opportunity to remain central even as competitors like Google, Microsoft, Amazon, and others invest in their own custom AI processors. Although allowing non-Nvidia processors could reduce demand for Nvidia’s own CPUs, it may ultimately strengthen its GPU dominance by enhancing overall system flexibility and competitiveness.

Huang also provided updates on the upcoming Grace Blackwell GB300 system, which is expected to deliver increased performance for AI workloads and is slated to launch in the third quarter of 2025. Additionally, Nvidia introduced the NVIDIA DGX Cloud Lepton platform, designed to connect developers with large-scale GPU resources from a global network of cloud providers. The platform aims to streamline access to high-performance AI computing power across services and regions.

Lastly, Nvidia announced plans to expand in Taiwan with a new office and an AI supercomputer project in partnership with Foxconn. The initiative aims to support Taiwan’s innovation ecosystem in advancing AI and robotics, including through collaborations with industry leaders such as TSMC.
